Agari has made a significant investment in infrastructure as code. Almost two years into this project, we’ve learned some lessons. In my previous blog post, I discussed how to organize your automation repository and parameterize environments. For this post, I’d like to talk about state management and database automation.

One of the most frustrating things about working with Terraform is managing state, which Terraform stores in a terraform.tfstate file. The right solution depends heavily on your scale and the topology of your infrastructure. Typically, we maintain separate state files per product and environment, for a total of four, not counting one-off development and integration environments. Remote state can be kept in a variety of storage backends, such as Consul, Atlas or S3, and the terraform_remote_state resource allows the state produced by one configuration to be consumed by another, downstream configuration. This lets you build a multi-layered infrastructure if you need one. It might also make sense to separate state management according to the lifecycle and change frequency of each environment. Finally, don’t be afraid to hand-edit tfstate occasionally if things get out of sync or you otherwise run into trouble. The format is pretty easy to comprehend (if predictably verbose).
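Because the state file is plain JSON, small repairs can be scripted instead of done by hand in an editor. The sketch below assumes the version 4 state layout used by Terraform 0.12 and later (older releases used a different, `modules`-based layout), and the resource names and IDs are made up. It lists resource addresses and drops one resource that was, hypothetically, deleted outside Terraform, bumping `serial` so Terraform treats the edited file as a newer revision of the same state.

```python
"""Minimal sketch: inspect and hand-edit a Terraform state document.

Assumes the JSON layout of state format version 4 (Terraform 0.12+).
All resource names and IDs below are hypothetical.
"""
import copy
import json
from typing import Dict, List


def resource_addresses(state: Dict) -> List[str]:
    """Return the address of every resource tracked in a v4 state."""
    addresses = []
    for res in state.get("resources", []):
        # Resources inside modules carry a "module" key such as "module.db".
        prefix = f"{res['module']}." if "module" in res else ""
        if res.get("mode") == "data":
            prefix += "data."
        addresses.append(f"{prefix}{res['type']}.{res['name']}")
    return addresses


def remove_resource(state: Dict, rtype: str, name: str) -> Dict:
    """Return a copy of the state without the given root-module managed resource.

    The serial is incremented because Terraform uses it to order successive
    versions of the same state (same lineage).
    """
    edited = copy.deepcopy(state)
    edited["resources"] = [
        r for r in edited["resources"]
        if not (
            r.get("mode") == "managed"
            and r["type"] == rtype
            and r["name"] == name
            and "module" not in r
        )
    ]
    edited["serial"] += 1
    return edited


if __name__ == "__main__":
    # Hypothetical state with one instance that was terminated outside Terraform.
    state = {
        "version": 4,
        "serial": 7,
        "lineage": "example-lineage",
        "outputs": {},
        "resources": [
            {"mode": "managed", "type": "aws_instance", "name": "db_replica",
             "instances": [{"attributes": {"id": "i-0123456789abcdef0"}}]},
            {"mode": "data", "type": "aws_ami", "name": "base",
             "instances": [{"attributes": {"id": "ami-00000000"}}]},
        ],
    }
    print(resource_addresses(state))
    fixed = remove_resource(state, "aws_instance", "db_replica")
    print(json.dumps(fixed, indent=2))
```

For this particular repair, newer Terraform releases also provide `terraform state rm`; either way, keep a copy of the original file before editing it.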

Infrastructure automation gets hairy when it comes to your databases, but it’s also very important! Replication, sharding, fail-over, backup and migration all need to be repeatable and testable. So, don’t do it. Use RDS, use DynamoDB, use someone else’s database and trust them to run it for you. You’re far more likely to introduce instability or cause outright failure than someone who is selling a database-as-a-service. Database automation is very difficult and you will probably screw it up.

Unfortunately, unless you’re lucky enough to be able to architect the systems you’re going to be responsible for ex nihilo, you’re probably going to have to deal with a legacy database system or three. The very first thing I always check when encountering a database in the wild is replication.

Not to be conflated with backup, a streaming replica is a read-only copy of your database, continuously updated from the primary node. If your primary node fails, the replica can be promoted in-place to be a replacement for the failed primary and take over from the most recent transaction it processed. Another common use-case is distributing reads to alleviate load on the primary; you can have multiple read replicas for this purpose.
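The read-distribution use case can be sketched as a tiny router that sends anything that writes to the primary and round-robins reads across replicas. The host names are hypothetical, and a real application would hand the chosen host to its database driver or connection pool; `promote` only models the bookkeeping side of a failover.

```python
"""Minimal sketch: writes go to the primary, reads are spread over replicas.

Host names are hypothetical; this only decides *where* a statement should go.
"""
import itertools
from typing import List


class ReplicaRouter:
    def __init__(self, primary: str, replicas: List[str]):
        self.primary = primary
        self.replicas = list(replicas)
        # Round-robin over replicas; fall back to the primary if there are none.
        self._cycle = itertools.cycle(self.replicas or [primary])

    def host_for(self, readonly: bool) -> str:
        """Return the host that should serve a statement."""
        # Replicas are read-only, so anything that writes must hit the primary.
        if not readonly:
            return self.primary
        return next(self._cycle)

    def promote(self, replica: str) -> None:
        """Model a failover: a replica (must be in the list) becomes the primary."""
        self.replicas.remove(replica)
        self.primary = replica
        self._cycle = itertools.cycle(self.replicas or [replica])
```

Two caveats apply to a design like this: replicas lag the primary slightly, so reads that must see a just-committed write should go to the primary, and after `promote` the failed primary is simply dropped rather than rebuilt as a replica.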

At Agari, we use a package called repmgr to manage replication on our PostgreSQL clusters, and we’ve found it very helpful for managing cluster state. We’ve forked Modcloth’s repmgr Ansible role, which configures repmgr and also manages our failover process. repmgrd on the standby node monitors the health of the primary node. When it detects a failure, it shoots the other node in the head by calling the Amazon API to stop that instance, then takes over the Elastic IP (EIP) that points to the current primary node. There’s a small amount of lag associated with disassociating and reassociating the EIP, but it typically completes in under 30 seconds, which is acceptable for our use case. We’re working on moving to Consul’s service discovery instead of AWS EIPs, which should eliminate that latency. After a failover, there are still some manual steps to convert the previous primary node into a new streaming replica, but in the future we’ll probably provision a new replica from scratch automatically. It’s worth having a post-failover process in place to do some root cause analysis on the failure, but the more manual steps we can remove from the process, the better.
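The standby-side sequence described above (retry the primary, fence it, promote, move the EIP) can be sketched as follows. This is not repmgr’s code or the Modcloth role’s: every action is a hypothetical injected callable, which in production might wrap EC2’s StopInstances and AssociateAddress APIs and a `repmgr standby promote` call. The retry parameters are loosely modeled on repmgr’s `reconnect_attempts` and `reconnect_interval` settings, with made-up default values.

```python
"""Minimal sketch of a standby-side failover sequence (not repmgr's implementation).

All callables are hypothetical stand-ins for real health checks and AWS calls.
"""
import time
from typing import Callable


def detection_seconds(reconnect_attempts: int, reconnect_interval: float) -> float:
    """Worst-case time spent retrying before the primary is declared dead."""
    return reconnect_attempts * reconnect_interval


def monitor_and_failover(
    primary_healthy: Callable[[], bool],
    stop_old_primary: Callable[[], None],
    promote_local: Callable[[], None],
    move_eip_here: Callable[[], None],
    reconnect_attempts: int = 6,
    reconnect_interval: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Return True if a failover was performed, False if the primary recovered."""
    for _ in range(reconnect_attempts):
        if primary_healthy():
            return False
        sleep(reconnect_interval)
    # Fence first ("shoot the other node in the head") so the old primary
    # cannot keep accepting writes once this node is promoted.
    stop_old_primary()
    promote_local()
    # Clients follow the Elastic IP, so move it only once this node accepts writes.
    move_eip_here()
    return True
```

With the made-up defaults, detection alone takes up to 6 × 5 = 30 seconds, and that time adds to the EIP reassociation lag mentioned above, so both knobs matter when estimating total failover time.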

Replication is not backup. No matter how many redundant nodes you have in your database cluster, no matter how robust your sharding configuration, all it takes is one developer who swears up and down they thought they were on the staging system, not production. Little Bobby Tables over there is going to need some help. This is where having automated, testable point-in-time backup comes in.

For PostgreSQL, there are several options for backup tools. We’ve experimented with barman (which could not keep up with the size of our databases), custom EBS snapshot backups, and wal-e. Although EBS snapshots have some distinct advantages with regard to restore time, we’ve standardized on wal-e. A cron job pushes a base backup to S3 once every 24 hours, and PostgreSQL’s archive_command is set up to compress each WAL segment and archive it to an S3 bucket, which is configured with a lifecycle policy that deletes files older than three days.
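It helps to work out what that retention policy means for recovery. The sketch below assumes, hypothetically, that base backups and WAL segments share one `wal-e/` prefix and expire together; the dict has the shape boto3’s `put_bucket_lifecycle_configuration()` expects, though nothing here calls AWS. Point-in-time recovery replays WAL on top of a base backup, so the oldest reachable moment is the oldest base backup that has not expired yet. S3 applies expiration asynchronously, so treat the result as approximate.

```python
"""Minimal sketch: an S3 expiration rule and the PITR window it implies.

Assumes (hypothetically) that base backups and WAL share the "wal-e/" prefix.
"""
from datetime import datetime, timedelta
from typing import Dict, List, Optional

RETENTION_DAYS = 3


def lifecycle_configuration(prefix: str = "wal-e/", days: int = RETENTION_DAYS) -> Dict:
    """Lifecycle rule in the shape used by put_bucket_lifecycle_configuration."""
    return {
        "Rules": [
            {
                "ID": "expire-old-backups",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Expiration": {"Days": days},
            }
        ]
    }


def earliest_restore_point(
    now: datetime, base_backups: List[datetime], days: int = RETENTION_DAYS
) -> Optional[datetime]:
    """Oldest moment still reachable by point-in-time recovery.

    A restore needs a surviving base backup at or before the target time; WAL
    written after that backup is younger, so it survives as well.
    """
    cutoff = now - timedelta(days=days)
    surviving = [b for b in base_backups if b > cutoff]
    return min(surviving) if surviving else None
```

With one base backup per day and a three-day expiry, the oldest recoverable point falls between two and three days in the past, which is worth knowing before someone asks for data from last week.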

Test your backups! Ideally, do it automatically as part of a CI pipeline, but at the very least try a restore every time you make major changes to the backup system or the database configuration. Backup restore failures are often triggered by database size exceeding some arbitrary threshold, so test early and test often.
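The shape of an automated restore check (back up, restore somewhere else, verify the data) can be sketched with SQLite standing in for PostgreSQL, because Python ships with it. This is a swapped-in engine, not our pipeline: a real CI job would restore a wal-e base backup onto a scratch host, but the verification step would look similar.

```python
"""Minimal restore test, using SQLite as a stand-in for PostgreSQL.

Backup -> restore into a fresh database -> integrity check -> compare data.
"""
import hashlib
import os
import sqlite3
import tempfile
from typing import Tuple


def table_checksum(conn: sqlite3.Connection, table: str) -> Tuple[int, str]:
    """Row count plus a hash of every row in a deterministic order.

    `table` is interpolated directly, so only pass trusted table names.
    """
    digest = hashlib.sha256()
    count = 0
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY rowid"):
        digest.update(repr(row).encode())
        count += 1
    return count, digest.hexdigest()


def backup_restore_check(source: sqlite3.Connection, table: str) -> bool:
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "backup.db")
        # "Backup": copy the live database to a file (sqlite3 online backup API).
        dest = sqlite3.connect(path)
        source.backup(dest)
        dest.close()
        # "Restore": open the backup as a fresh database, as a new host would.
        restored = sqlite3.connect(path)
        try:
            if restored.execute("PRAGMA integrity_check").fetchone()[0] != "ok":
                return False
            return table_checksum(restored, table) == table_checksum(source, table)
        finally:
            restored.close()
```

Since restore problems tend to appear only once the database grows past some size, it is also worth recording how long each restore takes in CI, so a creeping restore time shows up before it becomes an outage.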

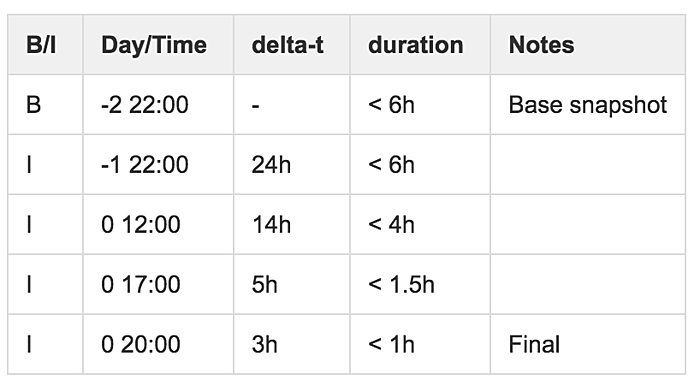

Lastly, I wanted to touch on something we learned while experimenting with EBS snapshot-based backup systems. If you can force your database into a consistent state on disk, you can take an atomic disk snapshot and then resume processing transactions without data loss. This can be a very effective way to move databases between, say, AWS regions or accounts, or to migrate from a legacy system to a newly provisioned one. Although our systems can typically tolerate minor interruptions to the datastore writers, some individual volumes on our database nodes are so large that a single EBS snapshot can take upwards of 14 hours. However! EBS snapshots are atomic, and each one only needs to store the changes made since the most recent previous snapshot. The upshot is that with a little bit of math and a series of fairly precisely timed snapshots, we can cut the time it takes to produce a final, mountable snapshot down to a few minutes instead of several hours.

As you can see, the incremental snapshots take significantly less time. Of course, this is all completely dependent on how much you’re writing to the datastore. You’ll have to run your own experiments to figure out your timing.
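The "little bit of math" can be sketched as a simple model. Its assumptions are ours, not measurements: a snapshot’s duration is proportional to the data changed since the previous snapshot at a fixed throughput, and the database writes at a steady rate while snapshots run. Each snapshot’s delta is whatever was written during the previous one, so durations shrink geometrically by the ratio of write rate to snapshot throughput, and only the last snapshot needs writes paused.

```python
"""Back-of-the-envelope model for converging on a quick final EBS snapshot.

Simplifying assumptions (hypothetical, not measured): snapshot time is
proportional to changed data, and writes arrive at a steady rate.
"""
from typing import List


def snapshot_schedule(
    volume_gb: float,
    write_gb_per_hour: float,
    snapshot_gb_per_hour: float,
    target_hours: float,
    max_rounds: int = 20,
) -> List[float]:
    """Return estimated durations (hours) of successive snapshots.

    The last entry is the final snapshot, taken with writes paused, once the
    pending delta is small enough to finish within target_hours.
    """
    if write_gb_per_hour >= snapshot_gb_per_hour:
        raise ValueError("writes outpace snapshots; deltas will never shrink")
    durations = []
    changed_gb = volume_gb  # the first snapshot has to copy the whole volume
    for _ in range(max_rounds):
        hours = changed_gb / snapshot_gb_per_hour
        durations.append(hours)
        if hours <= target_hours:
            break
        # Data written while this snapshot ran becomes the next snapshot's delta.
        changed_gb = min(volume_gb, write_gb_per_hour * hours)
    return durations


if __name__ == "__main__":
    # Made-up numbers: 7,000 GB at 500 GB/hour is a 14-hour first snapshot.
    print(snapshot_schedule(7000, 50, 500, target_hours=0.25))
```

With those made-up inputs (a 7,000 GB volume, 500 GB/hour of snapshot throughput, 50 GB/hour of writes), the model predicts snapshots of roughly 14 hours, 1.4 hours and 0.14 hours (about 8 minutes). Real EBS snapshot times vary, so the inputs should come from your own measurements.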